Supersize me. Or: When smartphones just get too big...

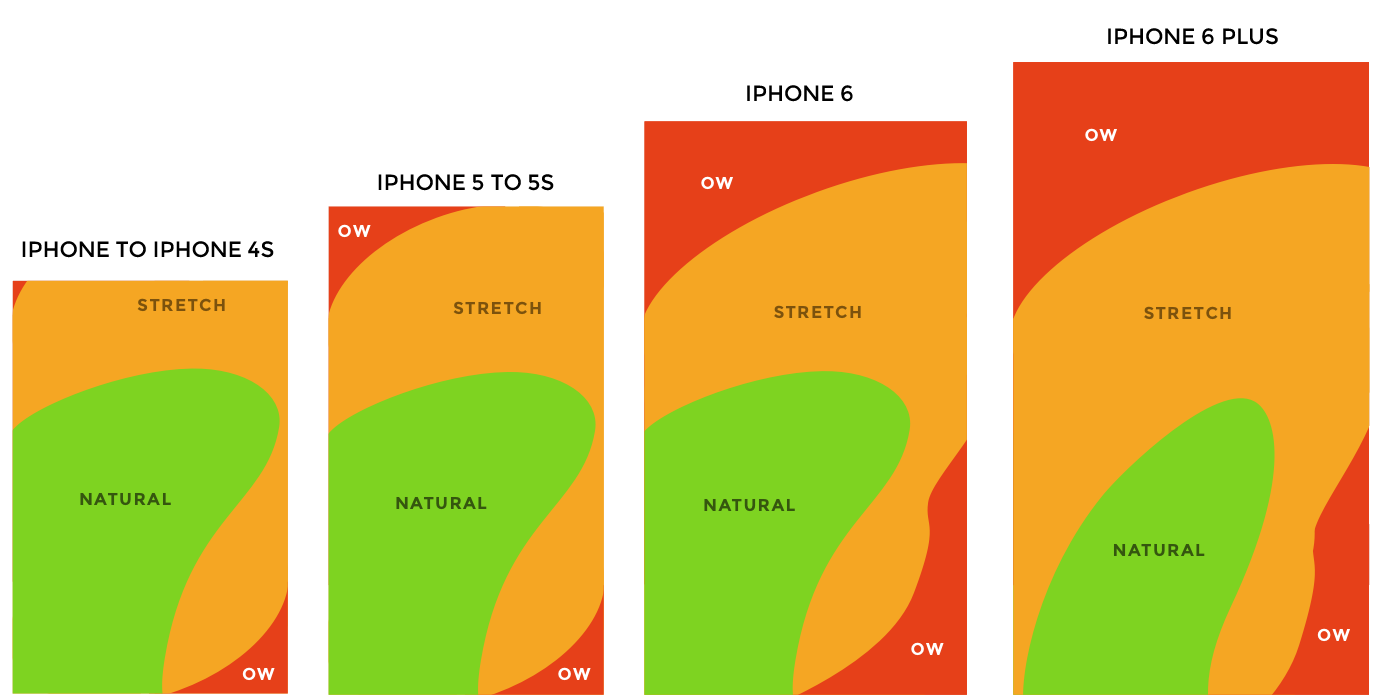

Now you have your absurdly large phone and want to reach the topmost corner of the screen to open a menu. Sound familiar? My iPhone 6 almost slipped out of my hand a couple of times while I was trying to do this one-handed. Of course, Apple has proposed a solution for this: Reachability, triggered by a double-tap on the home button. But you have to enable it in Settings, and it overwrites other potentially useful home button gestures. There is also another issue to consider: swipe ambiguity, where the same swipe gesture means different things in different apps. Sometimes the left-to-right swipe opens the off-canvas menu; other times it takes you back to the previous screen.

These little difficulties led me to work on an idea that lets you interact with elements at the top of the screen without having to touch them directly. By rethinking current swipe patterns, I came up with a solution that may take a little getting used to, but after that should be very easy to use in any application.

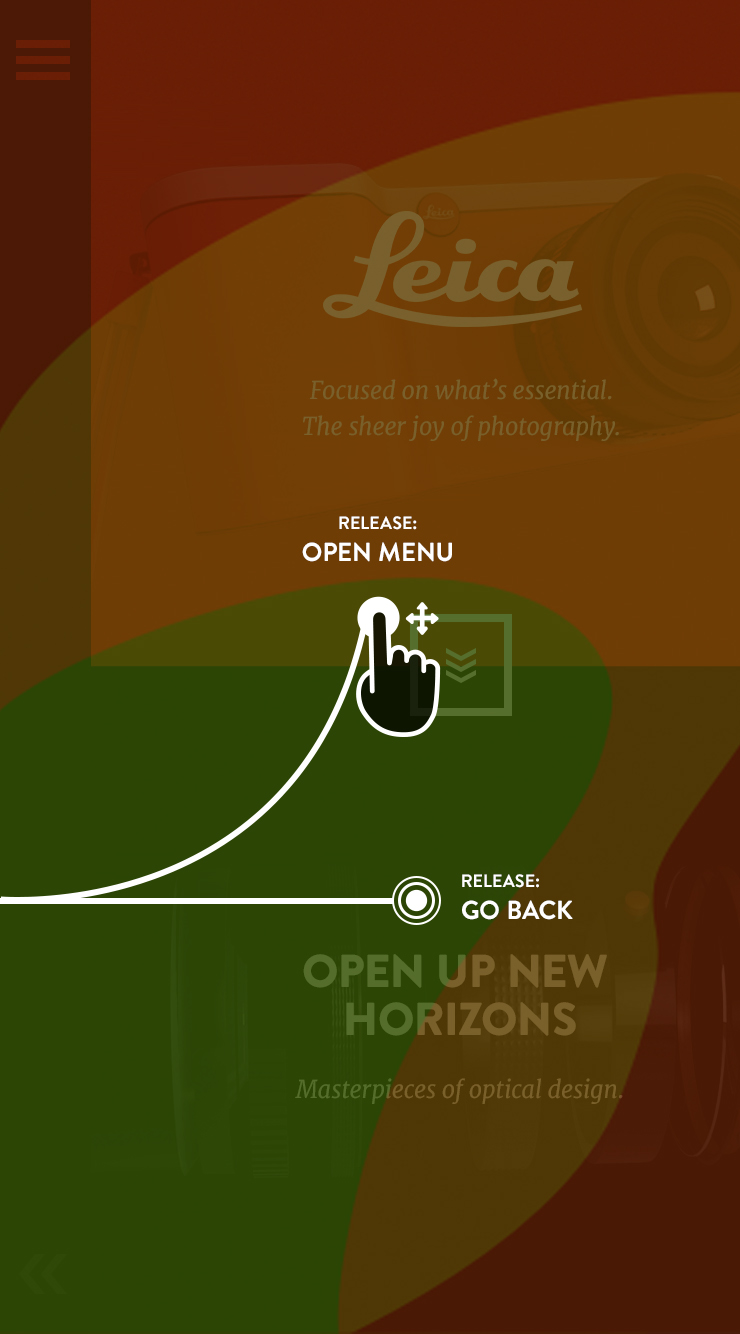

The basic premise is that every screen is split into interaction areas. Depending on where you release your swipe gesture, a different event is triggered. In this example, the concept is implemented for the "menu" and "back" actions.

During the swipe, a short UI animation highlights the target that would be triggered if you released at that point.

This animation illustrates:

- Release in the upper half of the screen -> open the menu

- Release in the lower half of the screen -> go back to the previous screen
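To make the zone logic a bit more concrete, here is a minimal sketch in TypeScript. It assumes the screen is split exactly in half at its vertical midpoint and that touch positions are measured in points from the top of the screen. The names `SwipeAction`, `actionForPosition` and `ZonedSwipe` are hypothetical and not part of any iOS or web API. The same classification runs while the finger moves (to drive the highlight animation) and once more on release (to trigger the action).

```typescript
// Hypothetical action names; the concept here only covers "menu" and "back".
type SwipeAction = "menu" | "back";

// Map a vertical position to an interaction area.
// Assumption: y is in points from the top, and the screen is split exactly in half.
function actionForPosition(y: number, screenHeight: number): SwipeAction {
  return y < screenHeight / 2 ? "menu" : "back";
}

// Hypothetical tracker for a single swipe: while the finger moves, the current
// target is highlighted; on release, the target's action is triggered.
class ZonedSwipe {
  private current: SwipeAction | null = null;

  constructor(
    private readonly screenHeight: number,
    private readonly onHighlight: (action: SwipeAction) => void,
    private readonly onTrigger: (action: SwipeAction) => void,
  ) {}

  // Called for every touch-move event; only notifies when the target area changes.
  move(y: number): void {
    const next = actionForPosition(y, this.screenHeight);
    if (next !== this.current) {
      this.current = next;
      this.onHighlight(next); // e.g. start the short highlight animation
    }
  }

  // Called when the finger is lifted; the release position decides the action.
  release(y: number): SwipeAction {
    const action = actionForPosition(y, this.screenHeight);
    this.current = null;
    this.onTrigger(action);
    return action;
  }
}

// Usage: a swipe that starts low on an iPhone 6 (667 points tall) and ends high.
const log: string[] = [];
const swipe = new ZonedSwipe(
  667,
  (a) => log.push(`highlight ${a}`),
  (a) => log.push(`trigger ${a}`),
);
swipe.move(500);
swipe.move(450);
swipe.move(200);
swipe.release(180);
// log now holds: "highlight back", "highlight menu", "trigger menu"
console.log(log);
```

In this sketch, the direction of the swipe plays no role at all: only the position where the finger is lifted decides which action fires.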